Meta, in collaboration with researchers from the University of Chicago and the University of California, Berkeley, has developed a new framework called DreamGym. It aims to address the high cost, complex infrastructure, and unreliable feedback involved in training large language model (LLM) agents with reinforcement learning (RL). DreamGym trains agents in simulated RL environments rather than live ones, so they can learn to handle complex applications more efficiently.

DreamGym dynamically adjusts task difficulty during training, so that agents gradually learn to solve more challenging problems. The research team's experiments show that DreamGym significantly improves the effectiveness of RL training, both in fully simulated settings and in scenarios where a model trained in simulation is then applied to the real environment. In environments where RL is possible but costly, DreamGym achieves performance comparable to popular algorithms while relying solely on synthetic interactions, greatly reducing the cost of data collection and environment interaction.
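To make the idea of adjusting difficulty concrete, here is a minimal Python sketch. It is not DreamGym's actual rule: the `DifficultyController` class, the integer difficulty levels, and the 0.8 and 0.3 thresholds are all assumptions chosen for illustration. The controller raises the level when the agent succeeds on most tasks in a batch and lowers it when the agent mostly fails.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DifficultyController:
    """Hypothetical difficulty controller (illustrative, not from DreamGym)."""

    level: int = 1
    min_level: int = 1
    max_level: int = 10
    raise_above: float = 0.8  # assumed threshold: tasks are too easy
    lower_below: float = 0.3  # assumed threshold: tasks are too hard

    def update(self, outcomes: List[bool]) -> int:
        """Adjust the level from one batch of task outcomes and return it."""
        if not outcomes:
            return self.level
        success_rate = sum(outcomes) / len(outcomes)
        if success_rate > self.raise_above:
            self.level = min(self.level + 1, self.max_level)
        elif success_rate < self.lower_below:
            self.level = max(self.level - 1, self.min_level)
        return self.level
```

A training loop would call `update` after each batch of rollouts and sample the next batch of tasks at the returned level.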

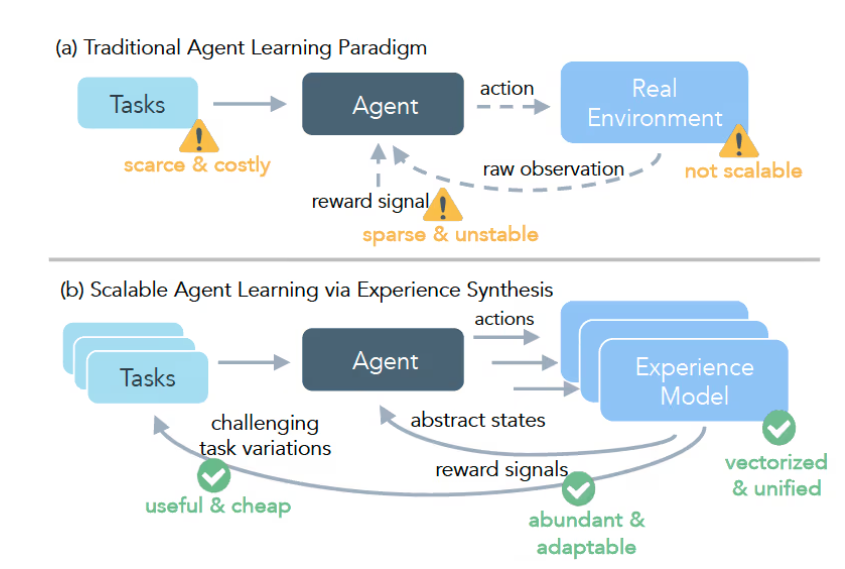

Training LLM agents with reinforcement learning faces several challenges. Real-world applications often involve long action sequences and sparse feedback, so an agent receives a positive signal only after a series of correct actions. Collecting sufficiently diverse and verified data is also expensive, often requiring human experts for verification and annotation. DreamGym addresses these issues by offering an effective and safe training path that does not depend on live interaction.

The DreamGym framework has three core components. The first is the "reasoning-based experience model," which translates the dynamics of the target environment into a text space and thereby simulates the application environment. The second is the "experience replay buffer," a dynamic memory bank that guides the experience model's predictions and keeps the synthetic experiences diverse. The third is the "curriculum task generator," which automatically generates new and more challenging tasks based on the agent's performance. Together, the three components form a closed loop for efficient agent training.
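The way the three components fit together can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not DreamGym's implementation: every class and function name below is hypothetical, the experience model is a hard-coded rule standing in for an LLM, "similar" experiences are simply those from the same task, and the rule for proposing harder tasks is invented for the example.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Transition:
    task: str
    state: str
    action: str
    next_state: str
    reward: float


class ReplayBuffer:
    """Memory of past transitions used to ground new predictions."""

    def __init__(self, capacity: int = 1000):
        self.items = deque(maxlen=capacity)

    def add(self, transition: Transition) -> None:
        self.items.append(transition)

    def similar(self, task: str, k: int = 3) -> List[Transition]:
        # Assumption: "similar" means "same task"; a real system would
        # likely use some form of retrieval instead.
        return [t for t in self.items if t.task == task][-k:]


class ExperienceModel:
    """Stand-in for the reasoning-based experience model."""

    def __init__(self, buffer: ReplayBuffer):
        self.buffer = buffer
        self.last_context: List[Transition] = []

    def step(self, task: str, state: str, action: str) -> Transition:
        # In the real framework an LLM would predict the outcome in text,
        # conditioned on retrieved examples. Here a toy rule is used.
        self.last_context = self.buffer.similar(task)
        reward = 1.0 if action == "submit" else 0.0
        transition = Transition(task, state, action, f"{state} -> {action}", reward)
        self.buffer.add(transition)
        return transition


class CurriculumGenerator:
    """Proposes harder variants of tasks the agent already solves."""

    def __init__(self, seed_tasks: List[str]):
        self.tasks = list(seed_tasks)

    def propose(self, success: Dict[str, float]) -> List[str]:
        # Assumed rule: any solved task spawns one harder variant.
        new_tasks = [f"{t} (harder variant)" for t in self.tasks
                     if success.get(t, 0.0) >= 0.5]
        self.tasks.extend(new_tasks)
        return new_tasks


def run_round(model: ExperienceModel, curriculum: CurriculumGenerator,
              policy: Callable[[str, str], str], horizon: int = 3) -> List[str]:
    """Roll out the policy on every task, then ask for new tasks."""
    success: Dict[str, float] = {}
    for task in list(curriculum.tasks):
        state, solved = "start", False
        for _ in range(horizon):
            transition = model.step(task, state, policy(task, state))
            state = transition.next_state
            if transition.reward > 0:
                solved = True
                break
        success[task] = 1.0 if solved else 0.0
    return curriculum.propose(success)
```

In this sketch the agent never touches a real environment: every transition comes from `ExperienceModel.step`, every transition is written back to the buffer, and the outcomes of each round decide which tasks are added for the next one.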

The researchers evaluated DreamGym on multiple benchmarks covering e-commerce, embodied control, and real web interaction. DreamGym performed well across these tasks, especially in the WebArena environment, where the success rate of agents trained with it exceeded baseline methods by over 30%. In this way, DreamGym offers a feasible route to RL training in domains where it was previously impractical.

Key points:

🌟 DreamGym trains AI agents through simulated environments, reducing the cost and risk of RL training.

🚀 The framework dynamically adjusts task difficulty, allowing agents to gradually solve more complex problems.

💡 Experimental results show that DreamGym performs better than traditional training methods in various application areas.