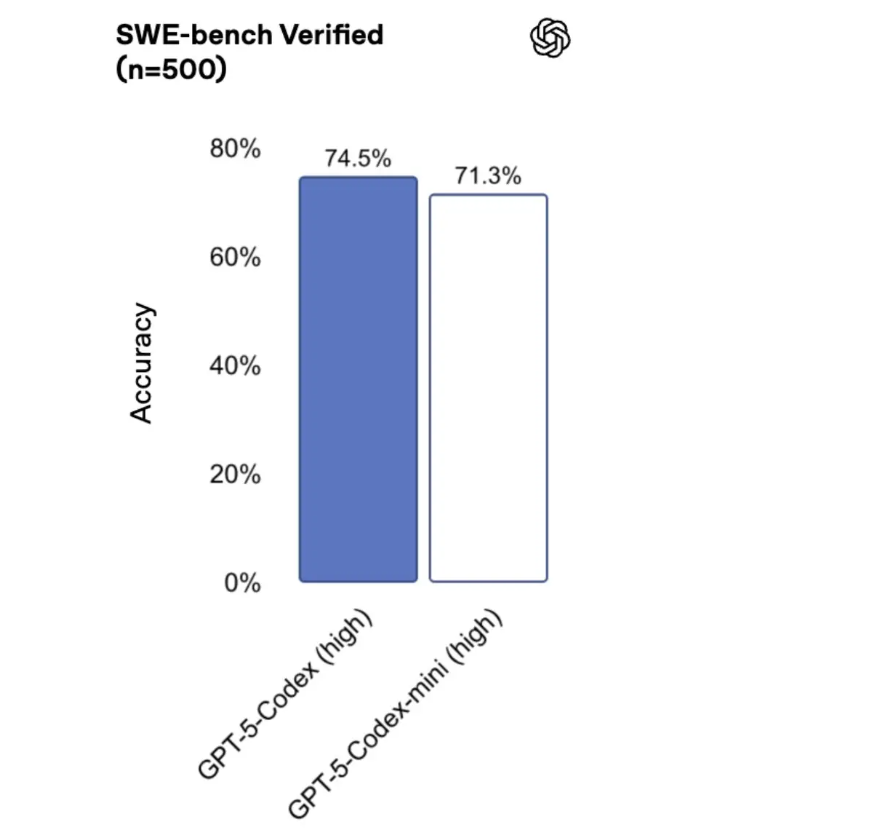

OpenAI has officially launched a new lightweight model, GPT-5-Codex-Mini. The model is designed for more efficient and cost-effective code generation, giving developers a cheaper option alongside the full-size Codex model. OpenAI has also expanded the Codex usage quota, so users get more calls under their existing subscription plans and credit systems.

OpenAI has also adjusted its service tiers. Rate limits for ChatGPT Plus, Business, and Edu users have increased by about 50%, so these users can send more requests before being throttled. ChatGPT Pro and Enterprise users now receive priority processing, which reduces response times and improves reliability at critical moments.

OpenAI recommends that developers choose GPT-5-Codex-Mini for simple software engineering tasks or when they are close to their rate limit. When a user's call volume approaches 90% of the quota, Codex suggests switching to the new model. GPT-5-Codex-Mini is available now in the command-line interface (CLI) and the integrated development environment (IDE) extension, and API access is expected soon.
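The switching rule described above can be sketched as a small client-side helper. This is only an illustration of the logic, not how Codex implements it: the 90% threshold and the "simple task" condition come from the announcement, while `QuotaStatus`, `recommend_model`, and the model identifier strings are hypothetical placeholders.

```python
from dataclasses import dataclass

# From the article: Codex suggests the mini model near 90% of the quota.
SWITCH_THRESHOLD = 0.90

# Assumption: placeholder identifiers, not confirmed API model names.
DEFAULT_MODEL = "gpt-5-codex"
MINI_MODEL = "gpt-5-codex-mini"


@dataclass
class QuotaStatus:
    """Hypothetical snapshot of how much of a quota has been consumed."""
    used: int
    limit: int

    @property
    def fraction_used(self) -> float:
        if self.limit <= 0:
            raise ValueError("limit must be positive")
        return self.used / self.limit


def recommend_model(status: QuotaStatus, simple_task: bool = False) -> str:
    """Pick the mini model for simple tasks or when usage nears the quota."""
    if simple_task or status.fraction_used >= SWITCH_THRESHOLD:
        return MINI_MODEL
    return DEFAULT_MODEL


if __name__ == "__main__":
    print(recommend_model(QuotaStatus(used=45, limit=100)))
    print(recommend_model(QuotaStatus(used=92, limit=100)))
    print(recommend_model(QuotaStatus(used=10, limit=100), simple_task=True))
```

A wrapper like this could run before each request so that routine work falls back to the cheaper model while the remaining quota is kept for harder tasks.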

In this update, the OpenAI team also optimized the underlying Codex system to give developers a more consistent experience. Previously, the call volume a user actually got could fluctuate with traffic load and request routing. According to OpenAI, the optimizations resolve this issue, so users should see stable service throughout the day.

Taken together, these updates give developers more model choices and a more efficient workflow, and they further promote the use of AI in programming.