Character AI and a research team from Yale University have released Ovi, an open-source model that generates video together with synchronized audio. Traditional audio-visual pipelines produce the picture and the soundtrack in separate stages; Ovi instead generates both at once.

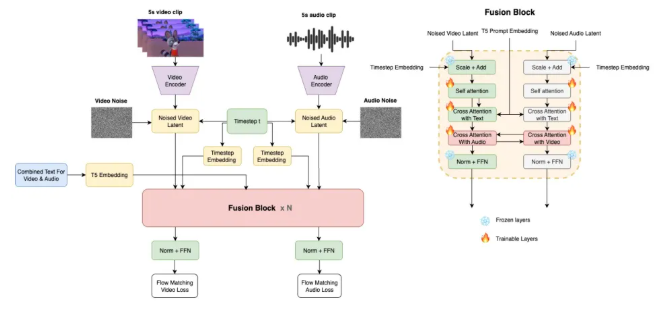

Ovi adopts a dual-backbone cross-modal fusion architecture that treats audio and video as a single, jointly generated output. The two modalities are processed in parallel and interact closely throughout generation, with the goal of keeping sound and picture tightly synchronized. This design departs from the earlier practice of generating the images first and adding sound afterward (or the reverse), an approach that often leaves audio and video out of sync.

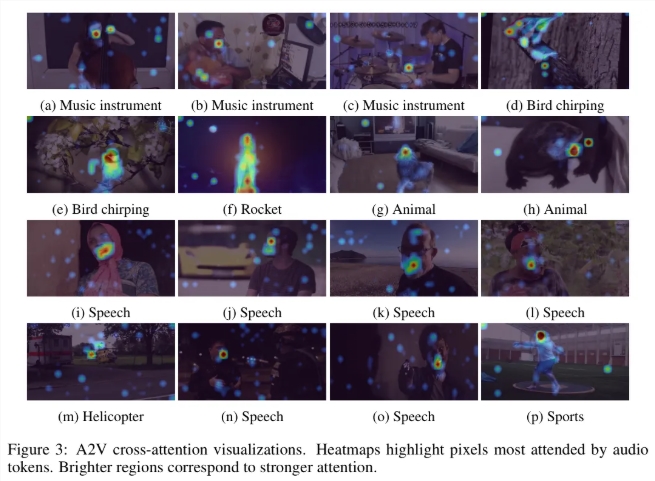

Ovi's architecture consists of two identical branches, one for video and one for audio. Both branches use the same diffusion transformer (DiT) architecture, so audio and video can interact directly during generation, which avoids unnecessary parameters and computational overhead. This continuous exchange of information lets Ovi learn fine-grained correspondences between the two modalities, such as matching lip movements to the sounds being spoken.
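The idea of two same-width branches exchanging information can be illustrated with a toy example. This is a minimal sketch, assuming the exchange takes the form of bidirectional cross-attention (video tokens attend to audio tokens and vice versa) followed by a residual update. The function names, token values, and the omission of learned projections and multiple heads are simplifications for illustration, not Ovi's actual implementation.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: List[Vector], context: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention from one modality's tokens to the other's.

    Simplification: the other modality's hidden states serve directly as
    keys and values (single head, no learned Q/K/V projections).
    """
    dim = len(queries[0])
    scale = 1.0 / math.sqrt(dim)
    out = []
    for q in queries:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in context])
        out.append([sum(w * v[d] for w, v in zip(weights, context)) for d in range(dim)])
    return out


def fusion_step(video: List[Vector], audio: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """One hypothetical bidirectional exchange between the two branches."""
    # Identical branch widths let tokens attend across modalities directly.
    if len(video[0]) != len(audio[0]):
        raise ValueError("video and audio branches must share a hidden width")
    # Both updates read the pre-update states, so neither branch goes first.
    video_update = cross_attend(video, audio)
    audio_update = cross_attend(audio, video)
    new_video = [[x + u for x, u in zip(t, up)] for t, up in zip(video, video_update)]
    new_audio = [[x + u for x, u in zip(t, up)] for t, up in zip(audio, audio_update)]
    return new_video, new_audio


if __name__ == "__main__":
    video = [[1.0, 0.0], [0.0, 1.0]]               # 2 video tokens, width 2
    audio = [[1.0, 1.0], [0.5, -0.5], [0.0, 2.0]]  # 3 audio tokens, width 2
    new_video, new_audio = fusion_step(video, audio)
    print(len(new_video), len(new_audio))  # 2 3: sequence lengths are preserved
```

Because the branches share a hidden width, no adapter is needed to move information between them; each branch keeps its own sequence length while absorbing context from the other.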

To align audio and video precisely in time, Ovi uses rotary position embeddings (RoPE). The position indices of the audio and video tokens are scaled so that tokens from the same moment in the clip line up, which keeps the two streams synchronized during generation. Ovi also conditions generation on a single, unified text prompt, which is intended to improve the accuracy and richness of the results.
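A minimal sketch of how scaled RoPE positions could line up two sequences of different lengths, assuming both sequences cover the same clip duration and that audio indices are rescaled onto the video-frame timeline before standard RoPE angles are computed. The sizes `NUM_VIDEO_FRAMES` and `NUM_AUDIO_TOKENS` and the helper `audio_position_on_video_timeline` are hypothetical; which modality Ovi rescales, and by what factor, is not stated here.

```python
import math
from typing import List


def rope_angles(position: float, dim: int, base: float = 10000.0) -> List[float]:
    """Standard RoPE angles: one frequency per pair of channels."""
    return [position * base ** (-2 * k / dim) for k in range(dim // 2)]


def apply_rope(vec: List[float], position: float, base: float = 10000.0) -> List[float]:
    """Rotate channel pairs (x0, x1), (x2, x3), ... by position-dependent angles."""
    if len(vec) % 2:
        raise ValueError("RoPE needs an even number of channels")
    out: List[float] = []
    for k, theta in enumerate(rope_angles(position, len(vec), base)):
        x, y = vec[2 * k], vec[2 * k + 1]
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x * c - y * s, x * s + y * c])
    return out


def audio_position_on_video_timeline(audio_index: int, num_audio_tokens: int,
                                     num_video_frames: int) -> float:
    """Hypothetical scaling: express an audio token's index in video-frame units,
    assuming both sequences span the same clip duration."""
    return audio_index * num_video_frames / num_audio_tokens


if __name__ == "__main__":
    NUM_VIDEO_FRAMES, NUM_AUDIO_TOKENS = 16, 64  # illustrative sizes only
    pos = audio_position_on_video_timeline(32, NUM_AUDIO_TOKENS, NUM_VIDEO_FRAMES)
    print(pos)  # 8.0: audio token 32 lines up with video frame 8
```

Since RoPE makes the attention score between two tokens depend only on the difference of their positions, putting both modalities on a shared time scale means an audio token and a video frame from the same instant are treated as "at the same place" by cross-modal attention.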

To build the training data, the Ovi team designed an elaborate data-processing pipeline aimed at ensuring both diversity and quality. They combined a dataset of paired audio-video clips with an audio-only dataset, giving the model a broad foundation for learning both modalities.
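The article does not say how the paired and audio-only data are mixed during training. One common pattern is sketched here purely as an illustration, not as a description of Ovi's pipeline: each training example is drawn from the audio-only pool with some probability, and the video loss is masked out for clips that have no video. The `Clip` type, `mixed_batches`, `audio_only_fraction`, and `loss_mask` are all hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import Dict, Iterator, List, Optional


@dataclass
class Clip:
    audio_path: str
    video_path: Optional[str]  # None marks an audio-only clip
    caption: str


def mixed_batches(paired: List[Clip], audio_only: List[Clip], batch_size: int,
                  audio_only_fraction: float, seed: int = 0) -> Iterator[List[Clip]]:
    """Yield batches that draw each clip from the audio-only pool with
    probability `audio_only_fraction`, otherwise from the paired pool."""
    if not 0.0 <= audio_only_fraction <= 1.0:
        raise ValueError("audio_only_fraction must be in [0, 1]")
    rng = random.Random(seed)
    while True:
        yield [rng.choice(audio_only if rng.random() < audio_only_fraction else paired)
               for _ in range(batch_size)]


def loss_mask(clip: Clip) -> Dict[str, bool]:
    """Audio-only clips have no video target, so only the audio loss applies."""
    return {"audio": True, "video": clip.video_path is not None}


if __name__ == "__main__":
    paired = [Clip("a0.wav", "v0.mp4", "a dog barks in a park")]
    audio_only = [Clip("a1.wav", None, "rain on a tin roof")]
    batch = next(mixed_batches(paired, audio_only, batch_size=4, audio_only_fraction=0.25))
    print([loss_mask(c) for c in batch])
```

The appeal of this kind of mixing is that the audio branch sees far more sound examples than paired video alone would provide, while the masked loss keeps audio-only clips from pushing the video branch toward an empty target.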

GitHub: https://github.com/character-ai/Ovi

Key Points:

🌟 Ovi is an open-source model, developed by Character AI and Yale University, that generates video with synchronized audio.

🎥 It uses a dual-backbone cross-modal fusion architecture in which audio and video interact throughout generation to stay tightly synchronized.

📊 The team combined paired audio-video data with audio-only data to build a diverse, high-quality training set for Ovi.