Meta's AI research team, working with the National University of Singapore, has developed a new reinforcement learning framework called SPICE (Self-Play In Corpus Environments). In SPICE, a single model plays two adversarial roles, one setting challenges and the other solving them, so that it can gradually improve its reasoning without human supervision. The framework is still at the proof-of-concept stage, but it could lay the groundwork for AI systems that adapt dynamically to their environments and are therefore more robust to the unpredictability of the real world.

The goal of self-improving AI is to let systems strengthen their abilities by interacting with their environment. Traditional methods often rely on human-curated question sets and reward functions, which are difficult to scale. Self-play offers an alternative: models improve by competing against themselves. However, existing self-play methods have notable limitations when applied to language models. Factual errors in generated questions and answers can compound over training, leading to hallucinations. In addition, when the question generator and the answerer share the same knowledge, the generator cannot produce genuinely new challenges, and the two tend to fall into repetitive patterns.

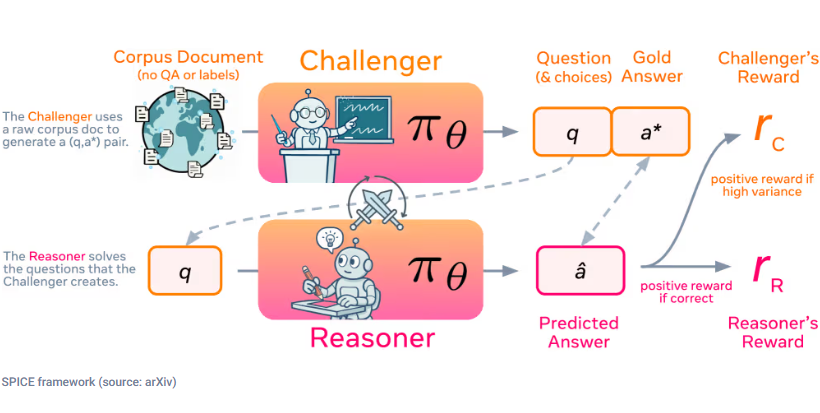

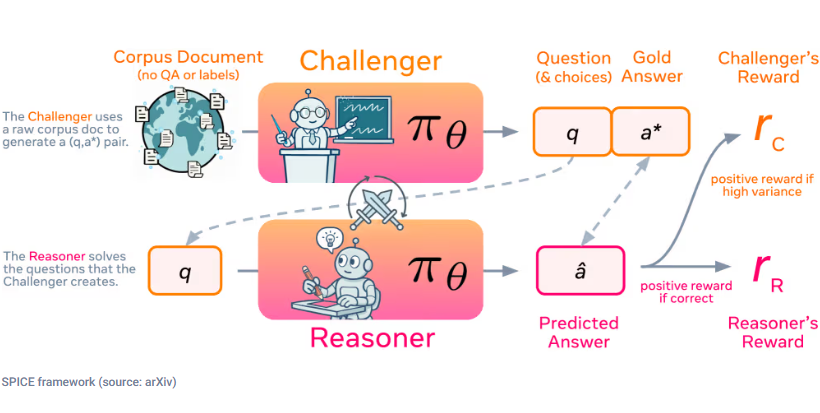

The SPICE framework uses a self-play mechanism in which one model takes on two roles. The "challenger" draws on a large corpus of documents to generate difficult questions, while the "reasoner" tries to answer them without access to the source documents. This creates information asymmetry: the reasoner cannot rely on the knowledge the challenger used to write the questions, and because each question is grounded in real document content rather than in the model's own knowledge, fewer errors creep in.
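To make the information asymmetry concrete, here is a minimal, hypothetical sketch of one self-play round. It is not the authors' implementation: the names `Task`, `make_cloze_task`, and `self_play_round` are invented for illustration, and the toy challenger simply hides the last word of a sentence, whereas in SPICE both roles are played by a language model. The point it shows is structural: the challenger reads the document and keeps the gold answer, while the reasoner only ever receives the question text.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    question: str  # what the reasoner is shown
    answer: str    # gold answer, taken from the document by the challenger


def make_cloze_task(document: str) -> Task:
    """Hypothetical toy challenger: hide the final word of the first sentence.

    A real challenger would be an LLM prompted with the document; this stand-in
    only illustrates that the gold answer comes from the document itself.
    """
    sentence = document.split(".")[0]
    words = sentence.split()
    answer = words[-1]
    question = " ".join(words[:-1]) + " ____."
    return Task(question=question, answer=answer)


def self_play_round(
    document: str,
    challenger: Callable[[str], Task],
    reasoner: Callable[[str], str],
) -> bool:
    """Run one round and return True if the reasoner answered correctly."""
    task = challenger(document)           # challenger sees the document
    prediction = reasoner(task.question)  # reasoner sees only the question
    return prediction.strip().lower() == task.answer.strip().lower()


if __name__ == "__main__":
    doc = "The capital of France is Paris. It lies on the Seine."
    print(self_play_round(doc, make_cloze_task, lambda q: "Paris"))  # True
    print(self_play_round(doc, make_cloze_task, lambda q: "Lyon"))   # False
```

Because the reasoner is typed as a function of the question string alone, it has no channel through which to read the source document.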

This adversarial dynamic produces an automatic curriculum. The challenger is rewarded for generating diverse questions at the edge of the reasoner's ability, and the reasoner is rewarded for answering them correctly. This interplay drives both roles to improve together, continually finding and overcoming new challenges. Because the system works from raw documents rather than predefined question-answer pairs, it can generate a variety of task formats across different domains, removing the domain restrictions of earlier methods.
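One way to reward questions "at the edge of the reasoner's ability" is sketched below. This is an assumed reward shape for illustration, not the formula from the paper: the reasoner receives 1 for a correct answer and 0 otherwise, and the challenger's reward is the variance of the reasoner's success over several attempts, p(1 − p), scaled so that it peaks at 1 when the reasoner succeeds about half the time. Questions that are always solved or never solved earn the challenger nothing.

```python
from statistics import mean


def reasoner_reward(correct: bool) -> float:
    """Assumed binary reward: 1.0 for a correct answer, 0.0 otherwise."""
    return 1.0 if correct else 0.0


def challenger_reward(attempts: list[bool]) -> float:
    """Assumed frontier reward over several reasoner attempts at one question.

    p * (1 - p) is the variance of a Bernoulli outcome with success rate p.
    It peaks at 0.25 when p = 0.5, so dividing by 0.25 scales it to [0, 1].
    """
    if not attempts:
        raise ValueError("need at least one reasoner attempt")
    p = mean(reasoner_reward(a) for a in attempts)
    return p * (1.0 - p) / 0.25
```

For example, four attempts with two correct give a challenger reward of 1.0, while four correct (or four wrong) give 0.0, which pushes the challenger toward questions that are neither trivial nor impossible for the current reasoner.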

The researchers evaluated SPICE on several base models and found that it improved performance on mathematical and general reasoning benchmarks, outperforming the baseline methods. The results suggest that reasoning skills developed through corpus-grounded self-play transfer across different models, pointing to a promising direction for self-improving reasoning methods.

Paper: https://arxiv.org/abs/2510.24684

Key Points:

✅ The SPICE framework lets AI systems gradually improve their reasoning through self-play, without human supervision.

✅ Splitting the model into challenger and reasoner roles creates information asymmetry and reduces errors.

✅ SPICE improved reasoning results across multiple base models, suggesting broad applicability.