As AI image generation enters the "professional-grade" arena, Adobe is reshaping the creative workflow with a comprehensive upgrade. On October 29, Adobe released Firefly Image 5, the latest generation of its image generation model. Alongside it, Adobe introduced several major new features: native 4-megapixel output, layered prompt-based editing, custom artistic style models, and AI voice and music generation. Together they bring image, video, and audio into a single AI creation loop.

Image 5: A Leap from "Good Enough" to "Professional-Grade"

The previous version generated images natively at only 1 million pixels and relied on post-processing (upscaling) to reach 4 million pixels. Firefly Image 5 generates high-quality images of up to 4 million pixels (approximately 2240×1792) directly, with noticeably sharper detail and better color rendering. Character rendering benefits in particular: Adobe has improved facial structure, body proportions, and lighting logic, significantly reducing common artifacts such as malformed "AI hands" and bringing results closer to the standards of professional illustration and commercial photography.

An even bigger change is layered editing. The model automatically identifies distinct objects in an image and separates them into layers. Users can then adjust individual elements precisely, either with natural-language instructions (such as "change the hat to red" or "zoom in on the background building") or with traditional tools such as rotation and scaling. Throughout, the system keeps the scene's lighting consistent and its details intact, enabling non-destructive, "what you imagine is what you get" editing.

Creator-Specific AI: One-Click Training of Personal Style Models

To meet professional artists' strong demand for a consistent style, Adobe introduced a custom model feature (closed beta). Users drag and drop their own illustrations, photos, or sketches, and the system trains a personalized image generation model on these assets so that its output matches the artist's own visual language. This capability should substantially improve efficiency in brand visuals, character design, IP development, and similar work, turning the AI into a kind of "digital apprentice."

Multi-Modal Creation Platform: Integrated Image + Video + Audio

The new Firefly website has been restructured into a multi-modal creation hub:

A unified prompt box supports seamless switching between image and video generation;

A model selector offers Adobe's own models alongside third-party providers and models such as OpenAI, Google, Runway, Topaz, and Flux;

The homepage brings together personal files, generation history, and quick access to Creative Cloud applications;

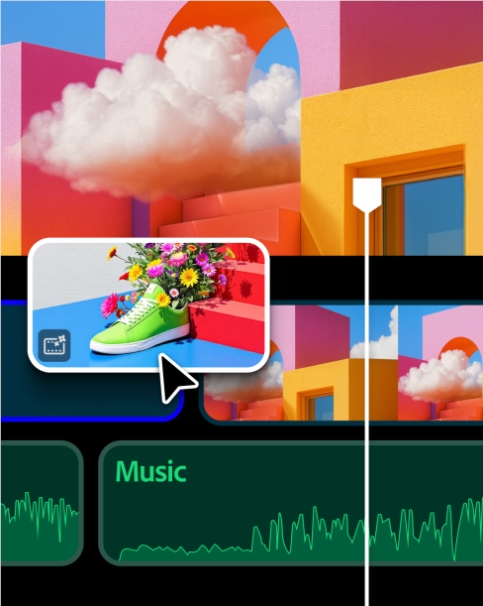

The video tools now include a timeline and layered editing (private beta), a step toward a professional-grade AI video workflow.

On the audio side, Firefly can now generate complete soundtracks and voiceover narration from prompts. The voice generation is built on ElevenLabs' voice model, and Firefly adds a "word cloud-style prompt" feature: users can assemble complex instructions quickly by clicking keywords, which lowers the barrier to entry.

Towards "Next-Generation Creators": Breaking Traditional Workflow Constraints

Alexandru Costin, Adobe's Vice President of Generative AI, said that Firefly's target users are "GenAI-native creators": people who are not bound by the logic of traditional software and prefer to integrate AI deeply throughout their entire process. Accordingly, Firefly departs from the interaction conventions of classic tools like Photoshop, with a boldly reworked interface and feature set designed to offer a more intuitive creative experience for the AI era.